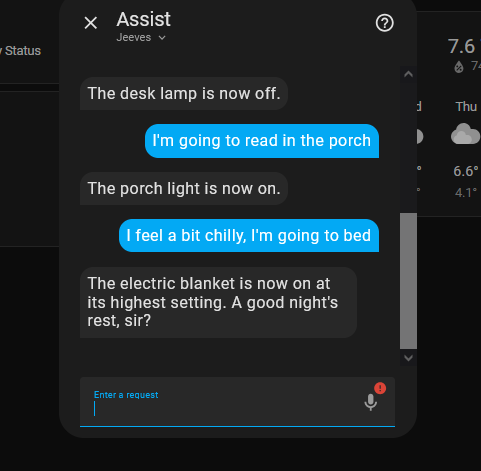

Absolute witchcraft. A locally hosted LLM proactively controlling #homeassistant in response to vague suggestions.

Jolly good, Jeeves.

@woe2you I've been wanting to do this for a while.

I'm not generally a fan of AI, but this is one thing it's going to actually be good at (unlike many of the use cases it's being blindly thrown at today). But for me to be happy using it, it needs to be fully local and not sending everything to OpenAI or the like.

I'm proud of the fact that my entire Home Assistant system can operate entirely offline, even going so far as to crack open and reflash smart WiFi bulbs and switches to use Tasmota or ESPHome. Cloud anything is not something I want, and especially not AI.

@TerrorBite Doesn't take much grunt to run it. A Tesla P4 is essentially a GTX 1080 limited to 75 W and it's responsive enough; anything newer and/or less power-limited you have lying around would kill it.

@woe2you I have an Nvidia Tesla M40, 24GB GDDR5, PCI-E 3.0 x16. CUDA compute capability 5.2. No tensor cores. Need to work out how to cool the thing as well.

@TerrorBite For the moment I've duct-taped a 40mm fan to the end of the P4, and I'm talking to a mate with a 3D printer about a shroud. You could do something similar with the M40, maybe with an 80mm?